- By Dave Vasko

- June 03, 2021

- Rockwell Automation

- Feature

Summary

Exploring the application, limits and myths of data analytics.

Connected factories are no longer the future of manufacturing; they are the present. Across industries and enterprise sizes, companies have implemented connected systems to gain greater insight into the production, capacity, and efficiency of their lines. The data allows them to reduce waste and hone workflows and capacity. However, even with the vast amount of data now available through the Industrial Internet of Things (IIoT), factories operate within only a small range of their possible capability. During the COVID-19 pandemic, many manufacturers were shocked to realize just how much hidden capability they had in their plants, as they were forced to pivot to different industries or scale up to meet demand.

Why are manufacturers generating so much data yet still only using a fraction of it? It’s because where decisions are made is as important as how decisions are made.

There is value in solving problems at device, control, edge and cloud. The right balance rests not in the tools or technologies, but in each use case.

What are you missing?

Cloud users typically see only an extremely small portion of the available information.

Typically, you will see only a small set of the possible data values. Internal device data and the process, control and device models aren’t visible at all. Moreover, the further you are from the source of the data, the less often you see updates to it, meaning intermediate data values might be missing.

As a result, people who rely solely on the cloud see only an extremely small portion of the available information. When you gather and analyze data close to the source you get real-time feedback; without it, you might build a model or simulation with limited capabilities and scope–because you don’t know what you don’t know. You need information that allows you to understand the real capability, not just the typical observed capability.
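The effect of infrequent updates can be illustrated with a minimal Python sketch. The readings, the four-second update interval, and the `downsample` helper are all hypothetical, chosen only to show how an intermediate value can vanish before it reaches a remote consumer.

```python
def downsample(samples, every):
    """Keep every `every`-th sample, as a slower upstream link might."""
    return samples[::every]

# Hypothetical readings taken once per second at the device.
device_samples = [5, 5, 6, 5, 5, 42, 5, 6, 5, 5, 5, 6]

# Assumption: the cloud receives only one reading every 4 seconds.
cloud_samples = downsample(device_samples, 4)

print(max(device_samples))  # 42: the spike is visible at the source
print(cloud_samples)        # [5, 5, 5]
print(max(cloud_samples))   # 5: the spike never reaches the cloud
```

A model built only on `cloud_samples` would conclude the value never leaves a narrow band, which is the "you don’t know what you don’t know" problem in miniature.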

Yet, this isn’t as simple as only relying on an edge solution.

The potential at the plant level, as well as the complexity of the operation and the industries served, means that analytics, artificial intelligence, and machine learning cannot be applied generically. Analytics can be a powerful tool when used in conjunction with libraries and domain experts who understand and can unlock unseen value and opportunities.

Exploring the application, limits and myths of data analytics

Data analytics provides an opportunity for process optimization and future predictions based on observed behavior, but it does not provide a complete picture. You will see only the data you can observe, so capabilities and limitations typically aren’t visible, and status may not be available.

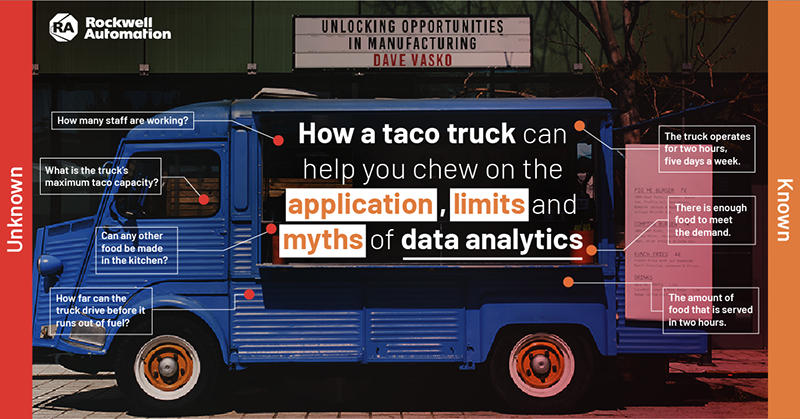

Take the example of a parked taco truck and what we know about it. It operates for two hours, five days a week. We can observe how many tacos are served in that time, and the orders-placed-to-orders-fulfilled time.

What we can’t observe is the truck’s capabilities: does it have mobility? At what range and speed? Is it properly maintained? What are the capabilities of the kitchen? Can other food or beverages be prepared? What is the maximum number of meals that could be served? How many hours a day could the truck operate?

We also don’t know about the staff: how many hours could they work? Do they have training to prepare other recipes? What is the cost of raw materials? Is the truck making a profit?

Companies must understand what limits there are on the data they are analyzing and work that into their models. Consider where additional data could be collected and included to give a more robust picture of the situation.

Real world applications are complex

The taco truck is a simple example that shows how most companies are dealing with a mixture of data and models, and much of the time the details are not readily available. This is particularly true when trying to examine the data remotely. It may be impossible to reconstruct the underlying models that describe the real operation.

The challenges around data availability include the sampling rate, the time data takes to reach a cloud application, and the way data is expressed. A variable could have a range of 0 to 1,000 but take only values between 3 and 8 during operation. Data availability may also be limited by network communication resources, resulting in huge gaps in the data.
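As a rough illustration of the range example, the sketch below computes how much of a variable's declared range the observed values actually span. The function name `observed_coverage` and the sample readings are assumptions made for this example, not part of any particular analytics product.

```python
def observed_coverage(values, declared_min, declared_max):
    """Fraction of the declared range spanned by the observed values."""
    if not values:
        raise ValueError("need at least one observation")
    if declared_max <= declared_min:
        raise ValueError("declared_max must exceed declared_min")
    observed_span = max(values) - min(values)
    return observed_span / (declared_max - declared_min)

# Hypothetical readings: the variable may range from 0 to 1,000,
# but in normal operation it only takes values between 3 and 8.
readings = [3, 5, 8, 4, 6, 7, 3, 8]
coverage = observed_coverage(readings, 0, 1000)

print(f"{coverage:.1%}")  # 0.5%
```

A coverage this low suggests that any model trained on the observed data says very little about how the equipment behaves across the rest of its range.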

Big data will only show you things you’ve tried

The cloud’s value is in specific types of problems: data sharing across ecosystems and the supply chain, data aggregation and visualization when real-time action is not needed (such as energy monitoring), high-compute applications, and machine learning model development and training, to name a few.

If you want to know more and do more than your current production, it’s better to start with analytics to gain insight into where problems exist. Armed with insight into the problems and how to quantify them, you can then leverage deep domain knowledge to create a strategy that addresses the most significant issues. This typically means adding sensors or building models to create the solution. Models can be critical to exploring solutions you have never tried before. This is how you find the things you’ve never tried or thought about, and it is where creativity comes into the equation.

Use the tools that are appropriate for the situation. What matters is not an all-or-nothing choice among edge, cloud or even a hybrid solution. Rather, you need to understand the problem you are trying to solve and the outcome you are trying to achieve, and then apply the right modeling and simulation using a complete picture of the data, not just what you already know.

Assess your needs and identify the best fit

There is value in solving problems at device, control, edge and cloud. It is crucial to find the right balance to unlock the full potential of a factory. The right balance is applying the right tools or technologies, guided by deep domain knowledge, for each use case.

About The Author

Dave is director of Advanced Technology at Rockwell Automation, where he is responsible for applied R&D and Global Product Standards and Regulations. He develops and manages technology to enable the future of industrial automation, including Augmented Reality, Artificial Intelligence, Digital Twins, Digital Transformation, IoT, and Collaborative Robotics. Dave participates in the National Science Foundation (NSF) workshops on Advancing The Adoption Of Artificial Intelligence (AI) In Advanced Manufacturing (And Maximizing Its Value For U.S. Industry), is co-chairing the next data-sharing workgroup, and is a member of the World Economic Forum Workgroup on data sharing.
