- By Eduardo Hernandez

- October 10, 2023

- TrendMiner

- Feature

Summary

Despite the apparent complexity of machine learning, only a handful of algorithms serve as the basis for all soft sensor models.

The algorithms behind machine learning are far from new (least squares regression, for example, dates to the early 1800s), but soft sensors have gained prominence only in the past three to four decades. They often rival their digital counterparts and sometimes even outshine them. Yet their development remains a mystery to many who are overwhelmed by the apparent complexity of machine learning.

Meanwhile, there’s a growing need for immediate or near-immediate measurements of critical parameters to improve operational performance. This has led to a greater need for soft sensors and increasing cooperative efforts between engineers and data scientists to create them. Those engineers who are just starting out with machine learning will be relieved to know that soft sensor design is not as complex as it first appears. In fact, there are only a handful of algorithms used for their design.

What is a soft sensor?

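A soft sensor is a software model that estimates a process variable that is difficult or slow to measure directly, using other measurements that are readily available. Soft sensors play various roles in a manufacturing environment, including: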

1. The helper: When there’s a shortage of workers, especially in labs, soft sensors rise to the occasion. They are capable of estimating viscosity, molecular weight and composition to fill in when manual intervention is not possible.

2. The substitute: In environments that are tough on equipment, digital sensors can fail. Soft sensors, on the other hand, offer a continuous flow of readings. They act as placeholders until their digital counterparts are back online.

3. The double: Sometimes processes require more monitoring or lack adequate digital sensors of their own. In these cases, soft sensors are used to bridge digital gaps.

Unlike digital sensors, soft sensors are developed using a computer programming language as part of a machine learning exercise. Machine learning is a cyclical process. It starts with cleaning the data, continues with choosing an appropriate algorithm, and then moves into the training phase. The trained model is tested before deployment. The cycle then begins anew, with each iteration aiming for a more refined outcome.
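The cycle described above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: the readings are made up, a straight-line fit stands in for whichever algorithm is chosen, and the function names (`clean`, `train`, `evaluate`) are hypothetical rather than taken from any particular tool.

```python
def clean(rows):
    """Cleaning step: drop rows with a missing input or target value."""
    return [(x, y) for x, y in rows if x is not None and y is not None]

def train(rows):
    """Training step: fit y = slope * x + intercept by least squares."""
    n = len(rows)
    mean_x = sum(x for x, _ in rows) / n
    mean_y = sum(y for _, y in rows) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in rows)
    sxx = sum((x - mean_x) ** 2 for x, _ in rows)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def evaluate(model, rows):
    """Testing step: mean squared error on rows the model has not seen."""
    slope, intercept = model
    return sum((slope * x + intercept - y) ** 2 for x, y in rows) / len(rows)

# Hypothetical raw data: (process reading, lab result); None marks a gap.
raw = [(1.0, 3.1), (2.0, 4.9), (None, 7.0), (3.0, 7.2), (4.0, 8.8),
       (5.0, None), (6.0, 13.1), (7.0, 14.9), (8.0, 17.0)]

rows = clean(raw)
split = int(len(rows) * 0.75)          # hold out the last quarter for testing
train_rows, test_rows = rows[:split], rows[split:]

model = train(train_rows)
test_error = evaluate(model, test_rows)
print("slope, intercept:", model, "test error:", test_error)
```

If the test error is too high, the cycle repeats: the data is cleaned differently, another algorithm is tried, or the model is retrained.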

For the most part, there are two main types of machine learning models. One is an unsupervised model, which is primarily used to describe the relationships among multiple variables without a designated target. The other is a supervised model, which requires a labeled dataset: each set of input values is paired with a known value of the target, and the model learns to predict that target from the inputs. The supervised model is the better choice for developing soft sensors or creating predictive tags. There are hundreds of supervised machine learning models, but only those from a category known as regression algorithms, which predict a continuous numeric value, are useful for creating soft sensors.

Model appearance

1. Linear regression: This is the simplest approach. Some tasks, such as determining the viscosity of certain materials, may be too complex for a linear regression, but the model shines because of its simplicity. If you need to figure out which variable has the most effect on the outcome, a linear regression will do the job: the fitted weights show which of the input variables (X) have the greatest effect on the target (Y), a property known as feature importance.

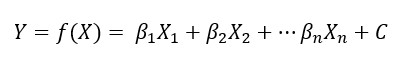

The formula shows what a machine learning model means to a process engineer: a function that predicts the value of a target variable (Y) from a linear combination of one or more input variables (Xs). With one input variable, the model is called a univariate (or simple) linear regression; with several, it is called a multivariate (more precisely, multiple) linear regression.

The weights in the equation, denoted Bn, are determined using the least squares method (LSM), which chooses the weights that minimize the sum of squared differences between the predicted and measured values. In a univariate linear regression (y = mx + b), the single weight is the slope m. It's possible to estimate some parameters of a chemical process using a linear regression model.
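For the univariate case, the least squares weights have a simple closed form, which the following sketch computes in plain Python. The data is hypothetical (a temperature reading paired with a lab-measured property), and the names `fit_line` and `predict` are illustrative only.

```python
def fit_line(xs, ys):
    """Return (slope, intercept) that minimize the sum of squared residuals."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Closed-form least squares solution for a single input variable.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical training data: temperature (X) and a lab-measured property (Y).
temperature = [50.0, 60.0, 70.0, 80.0, 90.0]
lab_value = [12.0, 14.0, 16.0, 18.0, 20.0]

slope, intercept = fit_line(temperature, lab_value)

def predict(x):
    """Soft sensor estimate of the property at temperature x (y = mx + b)."""
    return slope * x + intercept

print("slope:", slope, "intercept:", intercept, "estimate at 75:", predict(75.0))
```

Once fitted, `predict` can be evaluated on every new temperature reading, which is what turns an occasional lab measurement into a continuous estimate.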

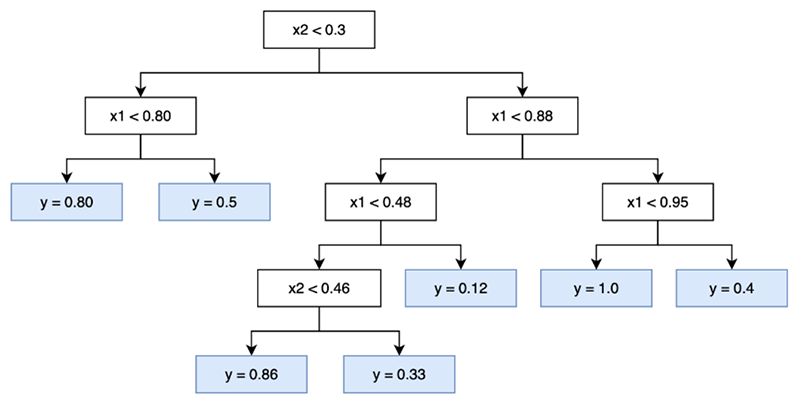

2. Decision tree: Picture a tree in which each branch signifies a rule derived from data, as shown in Figure 1. A decision tree can have as many branches as it needs to fit the data. However, this adaptability is both its strength and its weakness. Being so malleable makes the decision tree susceptible to overfitting, where the model becomes so attuned to noise in the training data that it predicts poorly on new data and is no longer reliable as a soft sensor. Conversely, if the tree is too constrained or not trained enough, it might miss essential patterns, which is known as underfitting. Neither overfitting nor underfitting is unique to the decision tree model.
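The trade-off between fitting and overfitting can be seen in a small sketch of a one-input regression tree. This is a simplified illustration, not a production implementation: the data is invented (two plateaus with a little noise), and `max_depth` is an assumed name for the setting that limits how many rules the tree may stack.

```python
def mean(values):
    return sum(values) / len(values)

def sse(values):
    """Sum of squared errors around the mean of the values."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values)

def build_tree(xs, ys, max_depth):
    """Grow a regression tree; each split is a rule of the form x <= threshold."""
    distinct = sorted(set(xs))
    if max_depth == 0 or len(distinct) < 2:
        return mean(ys)  # leaf: predict the average target value
    best = None
    for lo, hi in zip(distinct, distinct[1:]):
        threshold = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        error = sse(left) + sse(right)
        if best is None or error < best[0]:
            best = (error, threshold)
    threshold = best[1]
    left = [(x, y) for x, y in zip(xs, ys) if x <= threshold]
    right = [(x, y) for x, y in zip(xs, ys) if x > threshold]
    return (
        threshold,
        build_tree([x for x, _ in left], [y for _, y in left], max_depth - 1),
        build_tree([x for x, _ in right], [y for _, y in right], max_depth - 1),
    )

def predict(tree, x):
    """Follow the rules from the root until a leaf value is reached."""
    while isinstance(tree, tuple):
        threshold, left, right = tree
        tree = left if x <= threshold else right
    return tree

def mse(tree, xs, ys):
    return mean([(predict(tree, x) - y) ** 2 for x, y in zip(xs, ys)])

# Hypothetical noisy training data and noise-free points in between.
train_x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
train_y = [1.0, 1.2, 0.9, 1.1, 3.0, 3.2, 2.9, 3.1]
new_x = [1.5, 2.5, 3.5, 5.5, 6.5, 7.5]
new_y = [1.0, 1.0, 1.0, 3.0, 3.0, 3.0]

shallow = build_tree(train_x, train_y, max_depth=1)
deep = build_tree(train_x, train_y, max_depth=10)

print("training error:", mse(shallow, train_x, train_y), mse(deep, train_x, train_y))
print("new-data error:", mse(shallow, new_x, new_y), mse(deep, new_x, new_y))
```

With this illustrative data, the deep tree reproduces every training point, noise included, yet does worse than the one-rule tree on the new points. That is the signature of overfitting.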

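3. Random forest: This is a group of decision trees, each trained on a random sample of the data and each contributing toward the prediction, which for regression is typically the average of the trees' outputs. A random forest can provide a richer perspective than a single tree and is generally less prone to overfitting, although overfitting remains a concern.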
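The idea of many trees each contributing to one prediction can be sketched as follows. To keep the example short, each tree is reduced to a single rule (a depth-one tree, or stump) trained on a random resample of the data. A full random forest grows deeper trees and also randomizes which input variables each split may use, which has no effect here because the made-up data has only one input. All names are illustrative.

```python
import random

def mean(values):
    return sum(values) / len(values)

def fit_stump(xs, ys):
    """Fit a one-rule tree: returns (threshold, left_value, right_value)."""
    distinct = sorted(set(xs))
    if len(distinct) < 2:  # cannot split; predict the mean everywhere
        return (distinct[0], mean(ys), mean(ys))
    best = None
    for lo, hi in zip(distinct, distinct[1:]):
        threshold = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        lm, rm = mean(left), mean(right)
        error = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or error < best[0]:
            best = (error, threshold, lm, rm)
    return best[1:]

def stump_predict(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value

def fit_forest(xs, ys, n_trees=25, seed=0):
    """Train each tree on a bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]
        forest.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return forest

def forest_predict(forest, x):
    """Every tree contributes; the forest prediction is their average."""
    return mean([stump_predict(tree, x) for tree in forest])

# Hypothetical noisy data with a step between x = 4 and x = 5.
train_x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
train_y = [1.0, 1.2, 0.9, 1.1, 3.0, 3.2, 2.9, 3.1]

forest = fit_forest(train_x, train_y)
print("estimate at 2.0:", forest_predict(forest, 2.0))
print("estimate at 7.0:", forest_predict(forest, 7.0))
```

Because each tree sees a slightly different sample, their individual quirks tend to average out in the combined prediction.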

4. Gradient boosting: Gradient boosting iteratively refines its prediction using multiple decision trees. The key difference between this and other tree models is that each tree is specifically designed to correct the errors of its predecessor. While powerful, the layers of decision trees can make this model somewhat challenging to use, especially as it grows in complexity.
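The error-correcting behavior can be sketched for the squared-error case, where "correcting the errors of its predecessor" means fitting each new tree to the current residuals (measured value minus current prediction). This is a minimal sketch with one-rule trees and invented data; `n_rounds` and `learning_rate` are assumed parameter names, and real gradient boosting libraries add many refinements.

```python
def mean(values):
    return sum(values) / len(values)

def fit_stump(xs, ys):
    """Fit a one-rule tree: returns (threshold, left_value, right_value)."""
    distinct = sorted(set(xs))
    best = None
    for lo, hi in zip(distinct, distinct[1:]):
        threshold = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        lm, rm = mean(left), mean(right)
        error = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or error < best[0]:
            best = (error, threshold, lm, rm)
    return best[1:]

def stump_predict(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value

def boost(xs, ys, n_rounds=20, learning_rate=0.5):
    """Start from the mean, then let each stump fit the remaining residuals."""
    base = mean(ys)
    preds = [base] * len(ys)
    stumps = []
    errors = [mean([(p - y) ** 2 for p, y in zip(preds, ys)])]
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)  # learn what the model still gets wrong
        stumps.append(stump)
        preds = [p + learning_rate * stump_predict(stump, x)
                 for p, x in zip(preds, xs)]
        errors.append(mean([(p - y) ** 2 for p, y in zip(preds, ys)]))
    return (base, stumps, learning_rate), errors

def boost_predict(model, x):
    base, stumps, learning_rate = model
    return base + learning_rate * sum(stump_predict(s, x) for s in stumps)

# Hypothetical non-linear data that a single rule cannot capture.
train_x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
train_y = [1.0, 1.5, 2.5, 4.0, 6.0, 8.5, 11.5, 15.0]

model, errors = boost(train_x, train_y)
print("training error before boosting:", errors[0])
print("training error after boosting:", errors[-1])
```

The list `errors` records the training error after each round, so it shows how each added tree reduces what the previous ones left unexplained. On real data the same loop can eventually fit noise, so the error on held-out data should be tracked as well.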

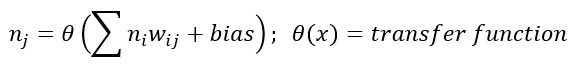

5. Neural network: A journey to the realm of deep learning leads to the neural network. Imagine a vast web, where each connection point processes a piece of information. Neural networks, particularly multi-layer perceptrons (MLP), operate with multiple layers of these connection points, or neurons, as shown in Figure 2.

The weighted inputs to each neuron in the hidden layer are added up and passed through an activation function (such as the sigmoid function). This function makes the model non-linear. Once the values have made their way through the hidden layers, they reach the output layer, which contains a single neuron. The weights and biases that best fit the features and target values are determined while the model is being trained, as shown in Figure 3.
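The calculation inside the network can be written out directly. The sketch below shows only the forward pass of an MLP with one hidden layer. The weights and biases are hypothetical placeholders, since in practice they are found during training, and the single output neuron is assumed to be linear (no activation), which is a common choice for regression.

```python
import math

def sigmoid(z):
    """Activation function that squashes any value into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def forward(inputs, hidden_weights, hidden_biases, output_weights, output_bias):
    """Forward pass of an MLP with one hidden layer and one output neuron."""
    hidden = []
    for weights, bias in zip(hidden_weights, hidden_biases):
        # Each hidden neuron adds up its weighted inputs plus a bias...
        z = sum(w * x for w, x in zip(weights, inputs)) + bias
        # ...and passes the sum through the activation function.
        hidden.append(sigmoid(z))
    # Assumption: the output neuron is linear, as is common for regression.
    return sum(w * h for w, h in zip(output_weights, hidden)) + output_bias

# Hypothetical network: 2 inputs, 3 hidden neurons, 1 output.
hidden_weights = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
hidden_biases = [0.0, 0.1, -0.1]
output_weights = [1.2, -0.7, 0.5]
output_bias = 0.3

# Two made-up, already scaled process readings.
estimate = forward([0.6, 0.9], hidden_weights, hidden_biases,
                   output_weights, output_bias)
print("soft sensor estimate:", estimate)
```

Training consists of adjusting the numbers in `hidden_weights`, `hidden_biases`, `output_weights` and `output_bias` until the estimates match the measured target values as closely as possible.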

Closing thoughts

In the race to achieve real-time measurements, engineers are collaborating with data scientists to create soft sensors at a growing rate. By learning only a handful of regression models, operational experts can contribute directly not only to the development of soft sensor models, but to the operational improvements that they help uncover.
